The idea of machines reading our thoughts sounds like something straight out of a science fiction novel, but that is what a new artificial intelligence (AI) system called DeWave can do.

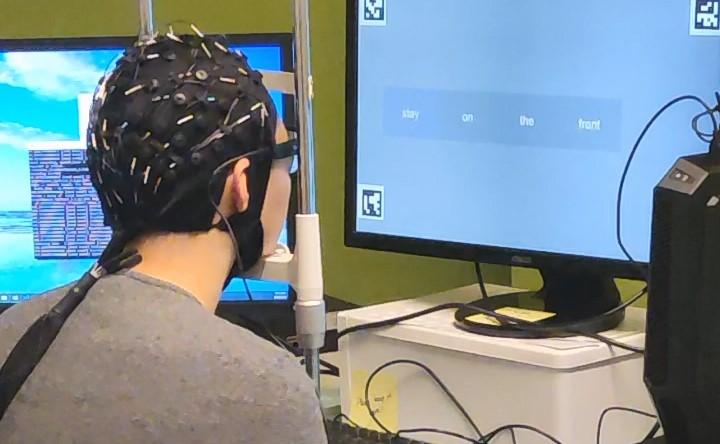

Australian researchers have developed technology that translates silent thoughts from brain waves into text, using an electroencephalogram (EEG) cap to record neural activity. Scientists at the University of Technology Sydney (UTS) achieved more than 40% accuracy in early experiments, and they hope DeWave could eventually enable communication for people who are unable to speak or type.

The non-invasive system requires no implants or surgery, unlike Elon Musk's planned Neuralink chips. It was tested on datasets recorded from subjects reading text while both their brain activity and their eye movements were monitored. By matching EEG patterns to the eye fixations that showed which word a subject was reading, DeWave learned to decode thoughts into words.
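To make the fixation-matching idea concrete, here is a minimal Python sketch of how an EEG recording could be cut into word-level windows using eye-tracking fixation times. It is not DeWave's code: the `Fixation` record, the `segment_by_fixations` function, the one-list-per-sample data layout, and the choice to summarize each window by its per-channel mean are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    word: str       # word the reader's gaze rested on (hypothetical record)
    start_s: float  # fixation onset, in seconds
    end_s: float    # fixation offset, in seconds


def segment_by_fixations(eeg, fixations, sample_rate_hz):
    """Pair each fixated word with the mean of every EEG channel during that fixation.

    eeg is a list of samples; each sample is a list of channel values.
    """
    pairs = []
    for fx in fixations:
        # Convert fixation times to sample indices
        start = int(fx.start_s * sample_rate_hz)
        end = int(fx.end_s * sample_rate_hz)
        window = eeg[start:end]
        if not window:
            continue  # fixation falls outside the recording or is too short
        n_channels = len(window[0])
        means = [sum(sample[c] for sample in window) / len(window)
                 for c in range(n_channels)]
        pairs.append((fx.word, means))
    return pairs


# Toy recording: 2 seconds at 4 samples per second, 2 channels.
eeg = [[0, 10], [2, 10], [4, 10], [6, 10], [8, 20], [10, 20], [12, 20], [14, 20]]
fixations = [Fixation("the", 0.0, 1.0), Fixation("author", 1.0, 2.0)]
print(segment_by_fixations(eeg, fixations, sample_rate_hz=4))
# [('the', [3.0, 10.0]), ('author', [11.0, 20.0])]
```

Averaging each window throws away the timing inside a fixation; it only keeps the example short. A real decoder would more likely pass richer features from each window to a trained model.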

Lead researcher Professor Chin-Teng Lin of UTS said in a statement: “This research represents a pioneering effort in translating raw EEG waves directly into language, marking a significant breakthrough in the field.”

Professor Lin continued: “It is the first to incorporate discrete encoding techniques in the brain-to-text translation process, introducing an innovative approach to neural decoding. The integration with large language models is also opening new frontiers in neuroscience and AI.”
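In general terms, "discrete encoding" means turning continuous signals into a sequence of symbols drawn from a fixed vocabulary, which is the kind of input language models work with. One common way to do this is vector quantization: each continuous feature vector is replaced by the index of the closest entry in a codebook. The Python sketch below illustrates only that general idea. It is not DeWave's implementation, and the `quantize` function, the tiny 2-D codebook, and the sample vectors are hypothetical.

```python
import math


def quantize(features, codebook):
    """Map each continuous feature vector to the index of its nearest codebook entry."""
    codes = []
    for vec in features:
        # Euclidean distance from this vector to every codebook entry
        distances = [math.dist(vec, entry) for entry in codebook]
        codes.append(distances.index(min(distances)))
    return codes


# Hypothetical 3-entry codebook of 2-D vectors; a real one would be learned.
codebook = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
features = [[0.1, -0.2], [0.9, 1.2], [4.0, 6.0], [0.6, 0.6]]
print(quantize(features, codebook))  # [0, 1, 2, 1]
```

Nearest-neighbour lookup is the simplest way to assign codes. In trained systems the codebook entries are typically learned alongside the encoder rather than fixed by hand as they are here.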

DeWave’s AI could one day help paralysis patients

Verbs proved easiest for the AI to identify from neural signals, while concrete nouns were sometimes translated as synonyms rather than the exact word. The researchers suggest this is because semantically related concepts can produce similar EEG patterns, which makes them hard for the model to tell apart.

With only a snug EEG cap needed to capture input, the technology could one day enable fluid communication for paralyzed patients or give them direct control over assistive devices. However, the system's accuracy still needs to rise to around 90% to be on par with speech recognition.

Combined with rapidly advancing language models, similar brain-computer interfaces could someday let people communicate or interact with technology simply by thinking.

Featured Image: UTS
